ZPE Takes Out-Of-Band (You Guessed It) To The Cloud

The basic idea behind out-of-band networks is simple: Don't put management interfaces on a public subnet. ZPE's products do this in a very modern way, but they also provide a lot more security and flexibility than a run-of-the-mill KVM solution.

Security Field Day

This article was inspired by a ZPE presentation I attended as a part of Gestalt IT’s Security Field Day 7 event. Recordings of the ZPE session are available at techfieldday.com/event/xfd7/.

I also wrote a little more about XFD7 in general here.

IPMI - System Setup And Management Via That Ethernet Port That's All By Itself On The Back Of Your Server

Generally speaking, there are two equally important yet completely separate environments one has to reckon with in order to set up computer systems (especially enterprise-level systems): the setup of the system itself, and the setup of the OS/Application stack that will run on it. The setup of the system itself is quite sensitive, as it is literally the bedrock of the server. It includes things like the boot process configuration, the cards and devices authorized to be connected or installed, and the firmware for all the components that make up the system. All of this is ordinarily accessed through the Intelligent Platform Management Interface (or "IPMI"), which might have a company-specific name. Examples include iDRAC (from Dell) or iLO (from HPE).

Note: I'm going to keep using the terminology "IPMI" for simplicity's sake. There are PLENTY of other use cases out there for this type of traffic, as well as other names that would apply. So if you're thinking of your organization's "admin ports," or "maintenance ports," etc., that's probably network traffic that can be thought of in the same vein as IPMI.

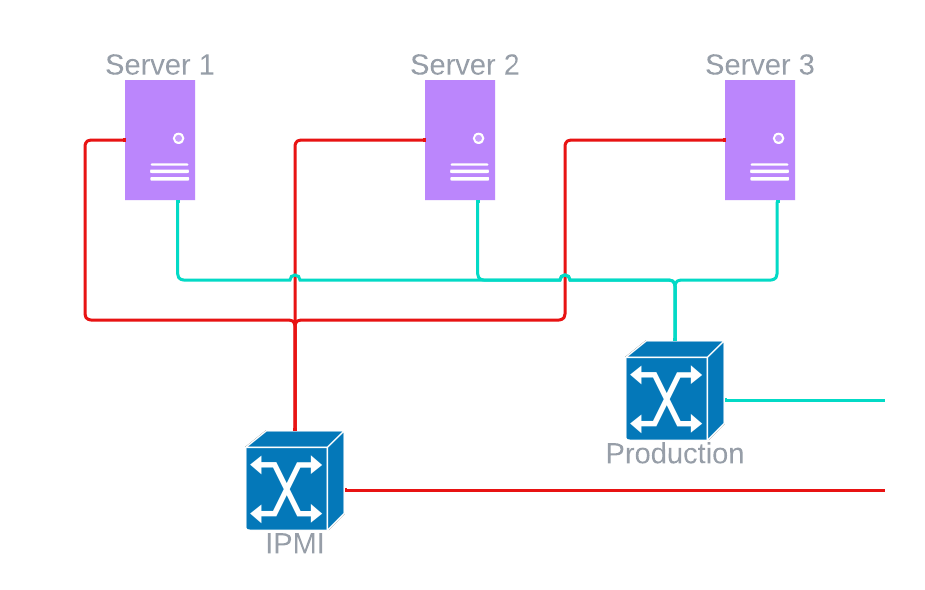

What's important about IPMI ports is that they are (ideally) managed "out-of-band," meaning they are managed in a way that keeps them completely independent of the OS/Application stack that the system is running. The OS doesn't even need to know that IPMI services exist. In the olden days, this meant physically plugging a keyboard, monitor, and mouse into the back of the computer for initial setup and OS install. These days it's done via Ethernet, but the goal is still the same: isolate the IPMI traffic from the system traffic, like so:

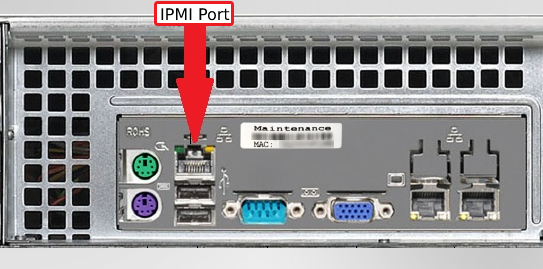

On many servers, the IPMI ethernet port is kept literally separate from the 'system' ethernet ports. This is primarily done because the ports connect to different subsystems on the actual motherboard, but I also believe that it is done to reinforce the idea that these networks need to be as separate as possible. Manufacturers recognize the danger posed by these ports being made public, with Dell stating that "DRAC's are intended to be on a separate management network; they are not designed nor intended to be placed on or connected to the Internet."

In the example below you can see the IPMI port all by itself (#PagingEricCarmen) on the left, while the 'normal' Ethernet ports are grouped together, off to the right:

The reason for this extreme separation is simple: protect the server subsystems from attacks that could come from being exposed to traffic on any public network. In a lot of datacenters this would be done with separate VLANs (or even fully physically separated switching environments). If we wanted to add detail to our purple-server diagram above, the IPMI ports could be placed on a private 192.168.x.x subnet (one of the RFC 1918 ranges, which are not routable on the public internet), with the 'production' system Ethernet ports assigned entirely different, publicly routable addresses.
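To make that addressing split concrete, here is a minimal sketch of a check that flags any management port whose address falls outside the RFC 1918 private ranges. The inventory, host names, and function names are all hypothetical and only there for illustration; this is not anything ZPE, Dell, or HPE ships.

```python
import ipaddress

# The three RFC 1918 private IPv4 ranges.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address sits inside one of the RFC 1918 ranges."""
    addr = ipaddress.ip_address(address)
    return any(addr in network for network in RFC1918_NETWORKS)


def exposed_management_ports(inventory: dict[str, str]) -> list[str]:
    """Return the (sorted) names of management ports that are NOT on a private range."""
    return sorted(
        name for name, address in inventory.items() if not is_rfc1918(address)
    )


if __name__ == "__main__":
    # Hypothetical inventory: port name -> IP address assigned to the IPMI port.
    inventory = {
        "server-a-ipmi": "192.168.10.21",
        "server-b-ipmi": "203.0.113.45",  # documentation-range address, stands in for a public IP
    }
    for name in exposed_management_ports(inventory):
        print(f"{name} is not on a private management subnet")
```

A private address is only one piece of the isolation, of course. The VLAN or physical separation described above is what actually keeps production traffic away from these ports.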

ZPE: (Access Everything, Everywhere, Remotely and, (obviously) Securely ... And Then Automate It)

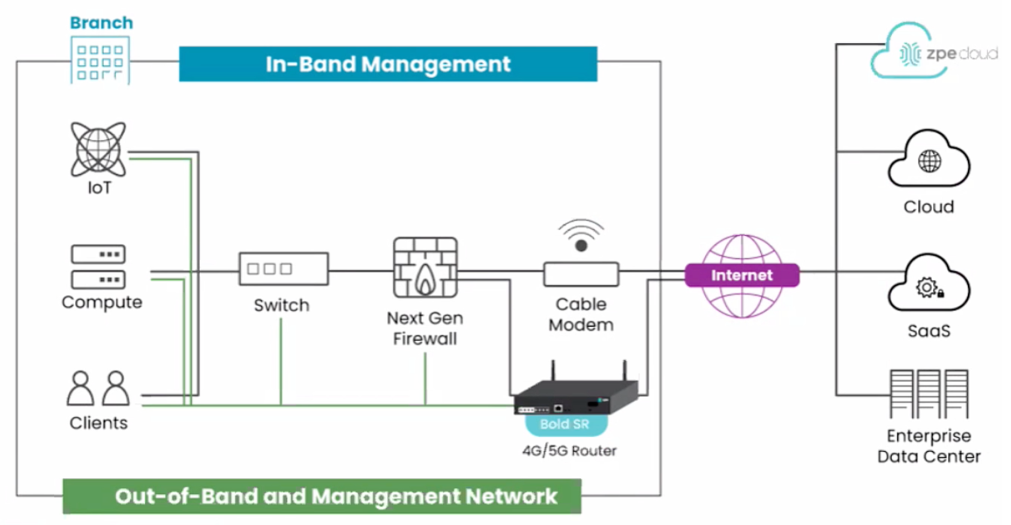

So a private, out-of-band network was (and probably still is, if we're being honest) the most common way to securely manage multiple IPMI ports in a datacenter. This becomes ungainly, though, if you have a lot of locations. And this brings us to the modern way of managing IPMI and the like, and to ZPE. ZPE's product line enables you to set up a multitude of these out-of-band networks, wherever you need, and access them all either individually or through a secure cloud-based portal.

Now, ZPE makes a big deal out of the convenience offered by using ZPE Cloud centralization. All of the big features you can think of that can benefit from centralization are here, and of course, they can be automated.

Things like:

- device setup, upgrade, and patching

- out-of-band access

- access controls

- logging

- monitoring

And since it's in the cloud, you can do this from anywhere, and of course, there's an app for that.

This centralization also applies to the ZPE devices themselves. When you purchase a device and register it to the ZPE Cloud, it is entirely configured from the cloud, not from on-prem. This makes things easy for onsite staff who may not have the time or expertise to configure it. The only thing the customer does is set up the SIM card for 4G/5G access and do the physical wiring so the device can communicate with the IPMI ports.

Once the system is in place, ZPE automation can really help with new system deployments. ZPE maintains a growing database of configuration scripts that can be used from a number of different automation engines (Salt, Ansible, etc.). These configurations are public source and customizable for your specific use case, and they take into consideration the annoying needs of some hardware setups. Some hardware might require a temporary TFTP server, for example. This setup could be scripted, the code loaded and applied, and then the TFTP server disabled after the process completes.
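The temporary-TFTP pattern is worth sketching, because the important part is that the helper service gets torn down even if the provisioning step fails. The snippet below is a generic illustration in Python, not one of ZPE's scripts: `temporary_service`, `provision`, and the device name are hypothetical, and the log entries stand in for whatever would really start the TFTP server and push the configuration.

```python
from contextlib import contextmanager
from typing import Callable, Iterator, List


@contextmanager
def temporary_service(
    start: Callable[[], None], stop: Callable[[], None]
) -> Iterator[None]:
    """Start a helper service and always stop it again, even if the work fails."""
    start()
    try:
        yield
    finally:
        stop()


def provision(device: str, log: List[str]) -> None:
    """Hypothetical provisioning run; the log entries stand in for real actions."""
    with temporary_service(
        start=lambda: log.append("tftp: started"),
        stop=lambda: log.append("tftp: stopped"),
    ):
        log.append(f"{device}: configuration loaded and applied")


if __name__ == "__main__":
    steps: List[str] = []
    provision("switch-01", steps)
    print("\n".join(steps))
```

In a real automation engine the same idea usually shows up as a cleanup or "always run" step; the context manager is just the most compact way to show it here.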

Cloud Concerns - ZPE More Privately

The ZPE Cloud is only one way to use the service. There is also an on-prem/private cloud option for deploying ZPE Cloud: instead of using the ZPE-hosted model, you can host it yourself. Additionally, ZPE has serial-based products that work with NodeGrid locally. These deployment models will of course limit the ZPE-provided features that are available, but it's good to know that there are 'offline' options, should they be needed or required.

Security Considerations

The security of the NodeGrid software that powers these devices has been given a lot of attention. This is understandable, considering the kind of access an attacker would have if either the NodeGrid device or the ZPE Cloud itself were to be compromised. ZPE built these devices with a Zero Trust philosophy, from the configuration fingerprinting and tamper protection built into the BIOS to the cloud services that power all that centralization we talked about just above. And this philosophy extends to all aspects of the tools and services that are utilized in order to maximize the functionality of the device. In the video, ZPE uses a delicious-looking cheeseburger image/metaphor (skip to about 9m30s if you're impatient) that I won't spoil here. Suffice it to say, they seem to take security extremely seriously, at all levels of their offerings.

The depth of security that you choose to employ on your devices can be intense. The BIOS can be replaced or reinstalled at will, and the disk is encrypted using TPM and secure boot. If you want to, you can set it up so that if you forget the password and cannot log in, the device is permanently useless and would need to be thrown away.

It's also worth noting that ZPE has a line of hardware sensors that can be used to monitor the sanctity/security of the physical environment. These sensors can monitor things like heat, rack/door status (open or closed, etc.), airflow, and whether water is detected. These kinds of sensor systems are not new, but as anyone who's ever tried to manage... or automate... or really, do... anything knows, the fewer vendors you have in the mix, the better.

Additional Features That Extend The Device's Use Cases

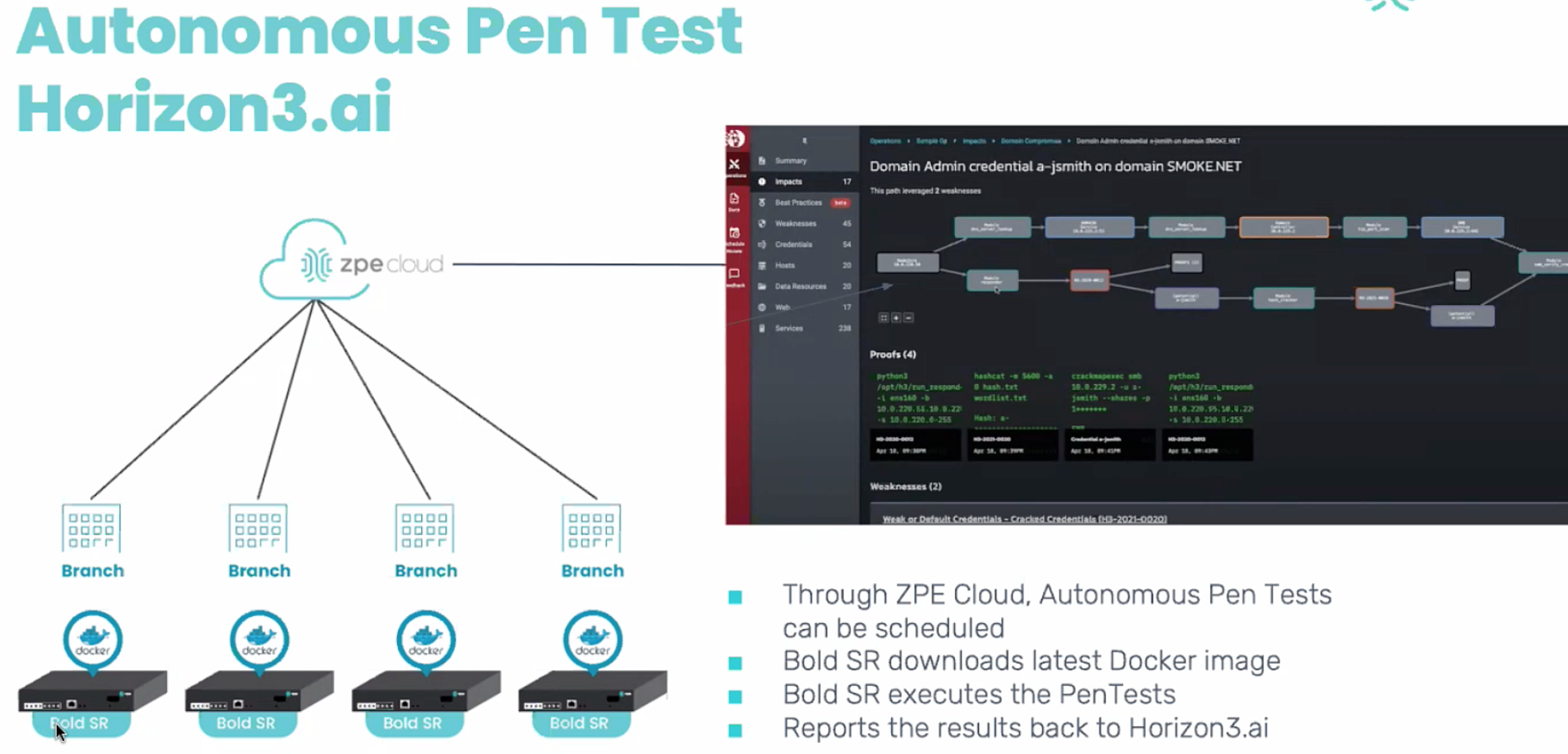

One thing that came up in the XFD7 session that was completely unexpected was the ability to use the ZPE Cloud and local devices to perform autonomous penetration tests. Much like everything else, this is intended to be as hands-off as possible: you set up the config script, assign it to the appliance(s) you want to use, and then schedule the script. The example that is discussed in the video utilizes horizon3.ai, but basically anything that works with automation will work with the ZPE Cloud. This is a very interesting extension of the product that I think in-house red teams will find quite useful.

Conclusion

It's been a long time since I had to manage hardware in a datacenter, but based on what I've seen from ZPE, I have to say I'm a fan of where they're going. We as an industry have come a long way from the days of rolling a KVM station down datacenter rows. Even for something as sensitive as IPMI, there is no denying the power that comes from centralization. ZPE is a great example of a way to do it securely, while at the same time continuing to add features that make hardware management easier.

And it's really tough to overstate the potential of using this out-of-band network as a base for automation. Systems configuration at the BIOS/firmware level can be a dicey proposition, not least because it doesn't have to be done regularly. (Do something once a week? You'll probably have the process memorized. But do it once a year? You're looking up the instruction manual every single time.) Having a vetted and tested script for upgrades will absolutely help system administrators breathe easier when it comes to these important management tasks.