Reinventing the, uh, script, with GitHub Copilot

GitHub Copilot is a GPT-3 based AI tool that helps programmers write code faster. It definitely has value, but it comes with legitimate caveats that programmers and IT managers alike need to take into consideration before relying on it.

GitHub Copilot was released in June of 2021 as a technical preview. It remains in technical preview to this day, and has seen constant updates between then and now. I just started messing with it a few weeks ago, and so far I have to say I am impressed. There will definitely be valid use cases where I will lean in Copilot's direction. But like I said at the top, there are things that need to be kept in mind about how it works before you commit to it 100%.

How does it work?

Copilot is based on the GPT-3 artificial intelligence project from OpenAI. Like all GPT-3 projects, Copilot requires a massive amount of 'completed' work to ingest and analyze. For the GPT-3 projects that write fiction and poetry (which we will side-quest into a little bit below), there is Project Gutenberg, and countless other massive collections of freely available written text. For Copilot, there is a huge amount of publicly accessible code in untold millions of repositories. And since Copilot is a GitHub project, the Copilot team can access these repos even more directly.

All of that analysis by the Copilot engine happened ages ago, and actually continues to happen in the background as people use the tool. When you as an individual programmer (and a Copilot code sample contributor) actually use Copilot, you don't see any of that happening. What you do see is something similar to how Microsoft's O365 Outlook tries to complete your sentence for you.

Copilot is currently only available as a plugin to Microsoft's VSCode program. Once you are accepted into the preview project you simply log in to VSCode with your GitHub account, and Copilot can be installed as an extension. Then, you code like normal.

Copilot knows what kind of program you're using by the file suffix as well as the syntax of the code itself. You type a few letters and wait, and Copilot tries to provide a suggestion. The suggestion (if there is one) will appear in a greyed-out italic font that you can accept or ignore. (You can also go into a separate Copilot panel and browse up to 10 alternative suggestions if you don't like the first one Copilot provides.)

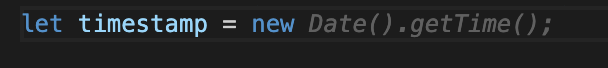

When Copilot has a suggestion, it looks like this:

Pretty simple, right? Copilot is basically trying to read ahead. And I have noticed that the farther along you are in a project, the more useful its suggestions become. Let's walk through a simple example to see this kind of thing in action, and also to highlight our first important caveat.

Using Copilot - A Simple Example

Since I am 1) brand-new to Copilot, and 2) not exactly a crackerjack programmer anyway, I wanted to start with as simple of an example as I could. I decided to use a simple language (bash shell) to solve a simple problem (determining an employee’s pay for a week’s worth of work.)

First Pass: Hours * Pay Rate, Not Even A Tax Taken Out

I will admit that this example is not brand-new to me. I use this all the time when introducing people to programming in general and bash scripting in particular. After all, it's logical. You worked X amount of hours, you get Y dollars per hour, multiply them together, you get Z dollars for the week. (In this simple (read: glorious) example even taxes are not a concern.)

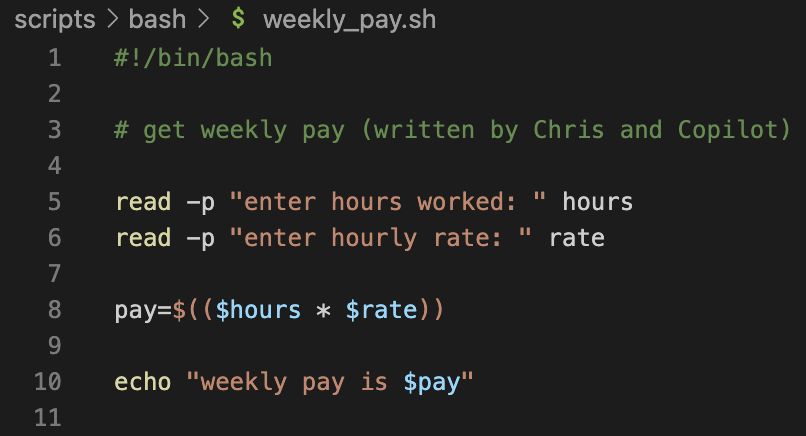

After a false starts, this is what Copilot and I came up with:

Looks good, right? Well, the algorithm is sound, and the math WILL work... sometimes.

Here's the problem: The built-in math that bash uses is integer-only. Meaning whole numbers only. So if someone works 40 hours and gets paid $18 per hour, the script works perfectly, and everyone's happy. But if someone worked 48.5 hours, and gets paid $22.45/hr, now we have a problem. bash will either round the math for you, or (more likely) the script will explode.
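To make the trap concrete, here is a minimal sketch of the integer-only behavior. The function name `weekly_pay_int` is my own invention for illustration and is not taken from the screenshot above. As far as I can tell, bash does not round decimal input here; the arithmetic expansion simply fails.

```bash
#!/usr/bin/env bash
# Hypothetical helper (name is an assumption): pay calculated with
# bash's built-in, integer-only arithmetic.
weekly_pay_int() {
    local hours=$1 rate=$2
    echo $(( hours * rate ))
}

weekly_pay_int 40 18      # whole numbers work: prints 720

# Integer division quietly throws away the remainder.
echo $(( 7 / 2 ))         # prints 3, not 3.5

# Decimal values make the arithmetic expansion fail. The call runs in a
# subshell so the failure cannot take the rest of the script down with it.
if ! ( weekly_pay_int 48.5 22.45 ) 2>/dev/null; then
    echo "bash arithmetic rejected the decimal values"
fi
```

Nothing in that function is a syntax error, which is exactly why a linter has nothing to complain about.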

This is a very common trap that first-time bash scripters fall into. This code is 100% syntactically correct. It passes the smell test, and it passes the shellcheck.net test. But if it were used in production it would be a disaster.

I did that on purpose with the bash script because it was an example that I know well and use often. What would have happened if I had done that in a work environment? What would have happened if I had to do something far out of my comfort zone? Maybe I’m paranoid, but as much as I do like this feature’s potential, this kind of result worries me. And it brings me to what should be the takeaway from all my blabbering on about Copilot:

You need to know the language you are writing in before you ask Copilot to help.

EVERY language has things like this. I don't even want to call them gotchas, because this was the design. It was always supposed to be like this. In bash, if you want fancy math, you use an external program like bc. In C you initialize a variable with type double instead of int. These are obvious to people who know these languages, but a bare beginner would not know that. And since using the wrong kind of variable (or math engine, in the bash example) is still syntactically correct, the error could go undetected for a while.
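For the bash case, a rough sketch of handing the math to bc might look like the following. The function name `weekly_pay` is an assumption of mine, and the sketch assumes bc is installed on the machine.

```bash
#!/usr/bin/env bash
# Hypothetical helper (name is an assumption): let bc do the
# multiplication, since bc understands decimal numbers.
weekly_pay() {
    local hours=$1 rate=$2
    # bc reads the expression from standard input and prints the result.
    echo "$hours * $rate" | bc
}

weekly_pay 40 18          # prints 720
weekly_pay 48.5 22.50     # prints 1091.25
```

bc has its own rules about how many decimal places it keeps, so a real payroll script would also need to decide how it wants to round.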

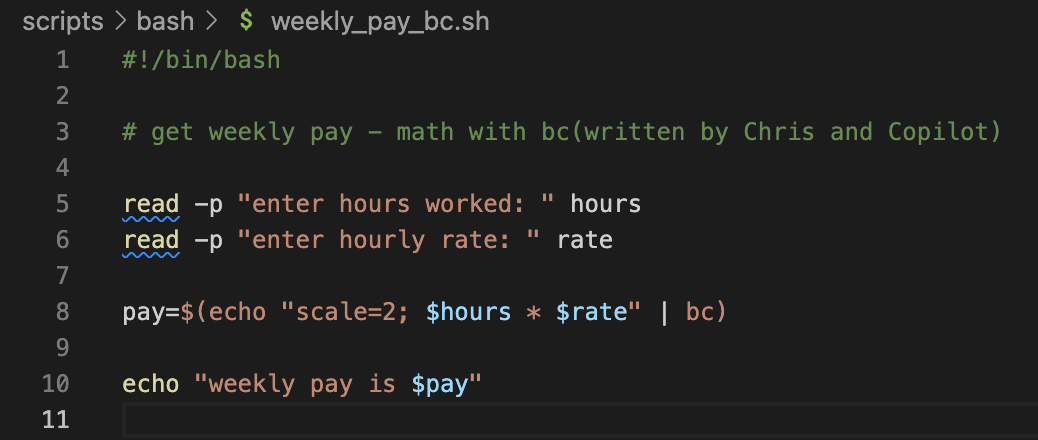

Second Pass: Adding Comments And bc For The Math

Next, I rewrote the script completely with a new name (and added more info to the comments in the file). This time through it actually did the math using bc on the appropriate line. This was particularly interesting because the line that uses bc (line 8) is the only one that is different between these two examples.

Again, the only thing that I changed about my approach was the filename, and the slightly more descriptive comment on line 3. I gave it a keyword, and at the danger zone line, Copilot pivoted and used the “correct” solution (which lined up precisely with that keyword). This is fascinating to me, and really does point to the power and potential that Copilot has.

Third Pass: Pushing It Closer To Real-World Usability

I will admit: I didn't document every step here. What I wanted to do was make a 'real' script, one that someone might legitimately use. I added basic error checking, command-line arguments, and a huge number of comments, with Copilot helping me every step of the way. Everything checks out. It used a looping method of checking the command-line arguments that I’ve definitely seen a million times before on Stack Overflow, but Copilot correctly customized it for my use case. (And more importantly, it nailed the correct syntax the first time through, something I will admit that I don't always do without an example to work from.) For the command-line options, Copilot even used a -t to indicate hours. It was smart enough to notice that the -h flag was already being used for "help."

This example is quite a bit longer than the others, so I won't screenshot it here, but for your amusement, here is the full, finished, Chris+Copilot produced, weekly pay script.

Initial Conclusions From This Weekly Pay Exercise

Familiarity is going to be key, and by that I mean familiarity with the language you are writing in as well as familiarity with Copilot itself. There’s no denying that Copilot helped me write these scripts faster, though I still had to confirm a few things and correct some errors. For example, Copilot didn’t get the optstring 100% right: you as the programmer need to know which flags require an argument. At least in my experience here, Copilot won't. That is another error that would 100% pass a syntax scanner, but could have unanticipated consequences down the line.
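For anyone who hasn't fought with an optstring before, here is a minimal sketch of the idea using bash's getopts builtin. This is not the finished script. The function name `parse_pay_args` and the -r flag for the pay rate are assumptions for illustration; only -t and -h come from the exercise above.

```bash
#!/usr/bin/env bash
# Hypothetical parser (function name and the -r flag are assumptions).
parse_pay_args() {
    local OPTIND=1 opt
    hours="" rate=""
    # In the optstring, a colon after a letter means "this flag takes an
    # argument". -t and -r need values, so they get colons; -h does not.
    # Writing "tr:h" by mistake is still valid syntax, but -t would then
    # be treated as a bare flag and its value would never be read.
    while getopts "t:r:h" opt; do
        case "$opt" in
            t) hours=$OPTARG ;;
            r) rate=$OPTARG ;;
            h) echo "usage: -t HOURS -r RATE"; return 0 ;;
            *) return 1 ;;
        esac
    done
}

parse_pay_args -t 48.5 -r 22.45
echo "hours=$hours rate=$rate"    # prints hours=48.5 rate=22.45
```

The colons are the whole story here, and they are exactly the kind of detail the programmer has to check by hand.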

And that’s my concern. I believe that this worked because I already knew what I was looking for. I was 75% there in my head, before ever touching a keyboard. There's no question that this was a faster endeavor because I was using Copilot, but I worry about the end result if I had only been 25% there (or a rank beginner).

BONUS: Chris's Criticisms of AI-Produced Content In General

We already have tons of websites that utilize GPT-3 AI/ML models in order to generate web content and blog posts, and while they’re grammatically, uh, readable? They’re still obviously machine generated and generally garbage. They are also crap at writing long-form. A quick paragraph? Sure, no problem.

Here’s a prompt fed to just such a model: “Write a short article about the pros and cons of machine-generated website content.”

And here’s the result:

Are you considering using machine-generated website content for your business? Here are some pros and cons to help you decide.

Pro: Machine-generated website content is fast and easy to produce.

Con: Machine-generated website content can be low quality and may not be what your customers are looking for.

Pro: Machine-generated website content can help you increase your web traffic and SEO ranking.

Con: Machine-generated website content may not be accurate or up-to-date.

The bottom line is that machine-generated website content has both pros and cons, so you need to decide whether the pros outweigh the cons for your particular business.

I mean, as far as the written word goes, this is... fine. The points it raises are technically accurate. It even incorporates CYA weasel-words like ‘can’ and ‘may not be.’ But it’s also 100 words long, most of which are an introduction and a redundant and unhelpful conclusion. It probably sounds like something you have already read on some other anonymous blog (which, spoiler alert: you have. A shocking number of mass-produced generic blogs get their content exactly like this.)

We don’t have time to do longer examples, but they exist. Mostly the AI excels in weirdness, or drawing crazy connections. Witness just about any longform published GPT-3 work. Most of them are poetry, and you'll note that most of them are also co-written and/or human-edited.

The main problems with GPT-3 revolve around that pesky consciousness thing, which, of course, AI doesn’t have. It’s just math. It is deeply dependent on previous examples and the training text, which means that it’s regurgitation and random combinations. It’s the very definition of a million monkeys with a million typewriters.

This also explains why it falls on its face when trying to tell a coherent story. AI doesn't keep an 'idea' in mind... it's just math. AI has no idea of the meaning of its inputs, and it has no idea of the meaning of its outputs. It can’t make natural connections, or advance ideas. It doesn't hurt that humans love incongruity. It’s in our nature to celebrate zaniness for its own sake, which causes us to over-credit and overrate what the AI spits out. (Having said that, I will admit that the Batman comic written by AI is zany AND funny.)